Investigating perception of spoken dialogue acceptability through surprisal

Stimuli page for work appearing in Interspeech 2022

Abstract:

Surprisal is used throughout computational psycholinguistics to model a range of language processing behaviour. There is growing evidence that language model (LM) estimates of surprisal correlate with human performance on a range of written language comprehension tasks. Although communicative interaction is perhaps the primary form of language use, most studies of surprisal employ monological, written data. Towards the goal of understanding perception in spontaneous, natural language, we present an exploratory investigation into whether the relationship between human comprehension behaviour and LM-estimated surprisal holds when applied to dialogue, considering both written dialogue and the lexical component of spoken dialogue. We use a novel judgement task of dialogue utterance acceptability to ask two questions: “How well can people make predictions about written dialogue and transcripts of spoken dialogue?” and “Does surprisal correlate with these acceptability judgements?”

We demonstrate that people can make accurate predictions about upcoming dialogue and that their ability differs between spoken transcripts and written conversation. We investigate the relationship between global and local operationalisations of surprisal and human acceptability judgements, finding a combination of both to provide the most predictive power.

Stimuli:

We provide the full sets of stimuli from the Switchboard and DailyDialog corpora, along with the plausibility ratings, here.

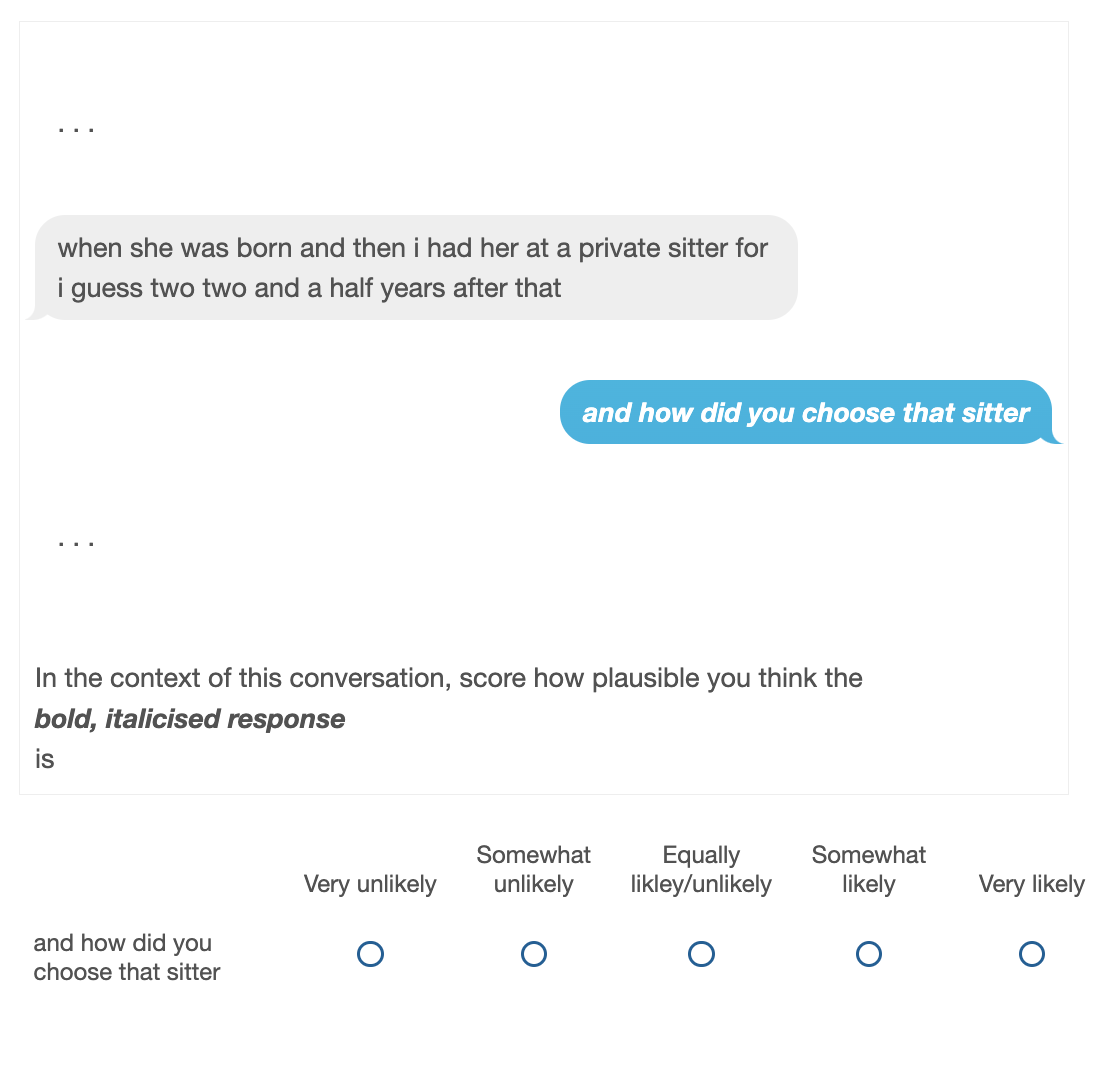

The Qualtrics survey (constructed using https://github.com/CSTR-Edinburgh/qualtreats) was presented to participants through Prolific Academic. An example of the stimuli presentation is included here: